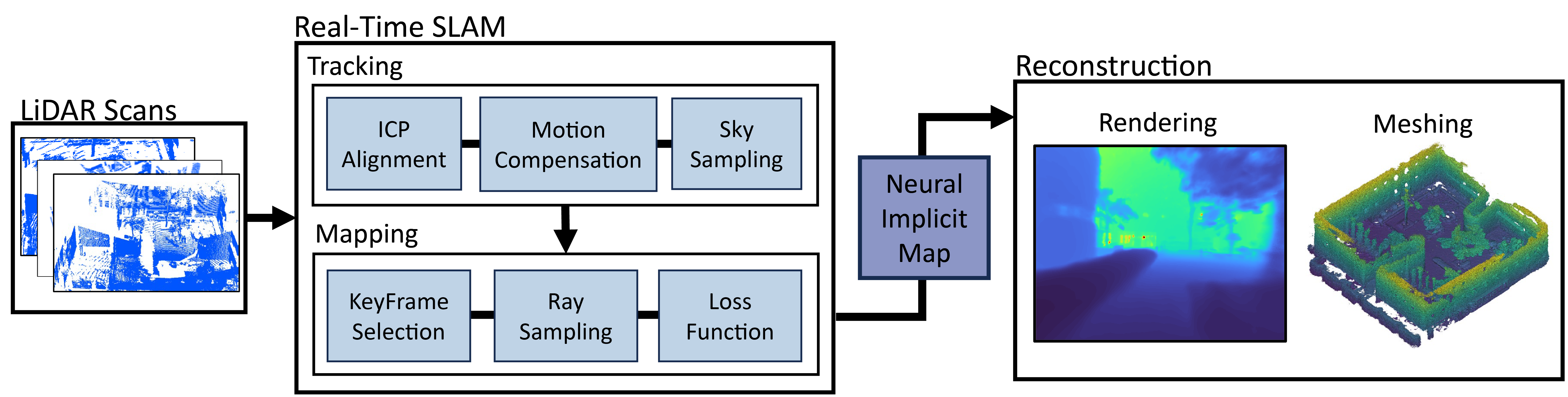

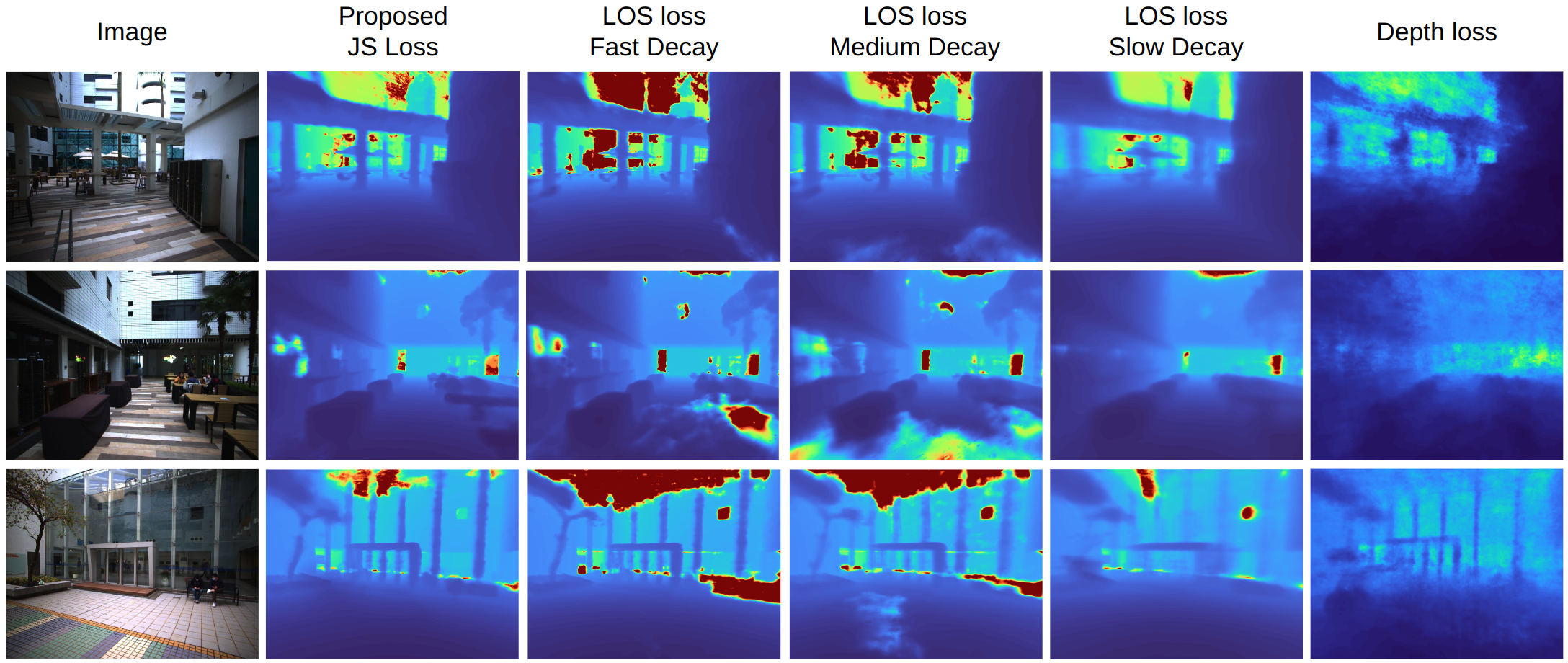

LONER 🚶

LiDAR Only Neural Representations for Real-time SLAM

RA-L 2023 Best Paper

Seth G. Isaacson*

Pou-Chun Kung*

Mani Ramanagopal

Ram Vasudevan

Katherine A. Skinner

sethgi@umich.edu

pckung@umich.edu

srmani@umich.edu

ramv@umich.edu

kskin@umich.edu

* Equal Contribution

All authors are affiliated with the Robotics Department of the University of Michigan, Ann Arbor.
